Meta learning system

This part is based on Lilian Weng’s post on meta-RL.

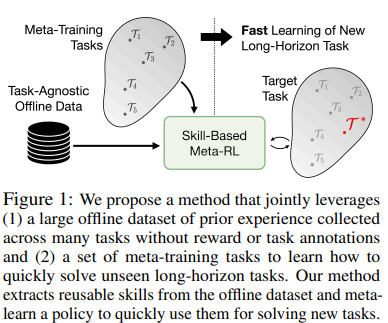

Meta-RL aims to adapt quickly when new situations arise.

To make RL adapt quickly, we introduce inductive biases into the system.

In meta-RL, we impose certain types of inductive biases from the task distribution and store them in memory. Which inductive bias to adopt at test time depends on the algorithm.

In the training setting, there is a distribution of environments. The agent needs to decide which inductive bias to use when it encounters a new environment.

The outer loop trains the parameter weights u, which determine the inner-loop learner (’Agent’, instantiated by a recurrent neural network) that interacts with an environment for the duration of the episode. For every cycle of the outer loop, a new environment is sampled from a distribution of environments, which share some common structure.
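The two loops described above can be sketched as follows. This is a minimal illustration under several assumptions that are not in the notes: the environment distribution is a family of two-armed Bernoulli bandits, the recurrent agent is a tiny hand-written RNN, and the outer loop uses simple random-search hill climbing in place of the gradient-based RL training that is normally used. All function names are hypothetical.

```python
import numpy as np

def sample_env(rng):
    """Sample a two-armed Bernoulli bandit; which arm is better varies per task."""
    p = rng.uniform(0.1, 0.9)
    return np.array([p, 1.0 - p])

def init_weights(rng, hidden=8):
    """Weights u of the recurrent agent: input, recurrent, and output matrices."""
    return [rng.normal(0, 0.5, (hidden, 3)),
            rng.normal(0, 0.5, (hidden, hidden)),
            rng.normal(0, 0.5, (2, hidden))]

def run_episode(u, arm_probs, rng, steps=20):
    """Inner loop: the agent acts with fixed weights u; only its hidden state changes."""
    W_in, W_h, W_out = u
    h = np.zeros(W_h.shape[0])   # memory is reset for every new environment
    prev = np.zeros(3)           # [one-hot previous action (2), previous reward]
    total = 0.0
    for _ in range(steps):
        h = np.tanh(W_in @ prev + W_h @ h)
        logits = W_out @ h
        probs = np.exp(logits - logits.max())
        probs /= probs.sum()
        a = rng.choice(2, p=probs)
        r = float(rng.random() < arm_probs[a])
        prev = np.array([a == 0, a == 1, r], dtype=float)
        total += r
    return total

def evaluate(u, rng, n_envs=30):
    """Average episode return over freshly sampled environments."""
    return float(np.mean([run_episode(u, sample_env(rng), rng) for _ in range(n_envs)]))

def outer_loop(iterations=50, seed=0):
    """Outer loop: adjust u so the inner-loop learner does well across the distribution.
    Assumption: random-search hill climbing stands in for a real RL optimizer."""
    rng = np.random.default_rng(seed)
    u = init_weights(rng)
    best = evaluate(u, rng)
    for _ in range(iterations):
        candidate = [w + rng.normal(0, 0.1, w.shape) for w in u]
        score = evaluate(candidate, rng)
        if score > best:
            u, best = candidate, score
    return u, best
```

Within an episode the weights never change, so any adaptation to the sampled bandit has to happen through the hidden state `h`. That is the sense in which the outer loop "determines" the inner-loop learner.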

The inductive bias could be hyperparameters, learned models, loss functions, etc. We can also borrow ideas from “Episodic Control” (Botvinick et al., 2019):

When a new situation is encountered and a decision must be made concerning what action to take, the procedure is to compare an internal representation of the current situation with stored representations of past situations. The action chosen is then the one associated with the highest value, based on the outcomes of the past situations that are most similar to the present.
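The lookup procedure described above can be sketched as a nearest-neighbour value estimate. This is a simplified illustration, not the exact MFEC or NEC algorithm: the class name `EpisodicMemory`, the Euclidean distance, the plain average over the `k` nearest neighbours, and the default value of 0.0 for untried actions are all assumptions made for the example.

```python
import numpy as np

class EpisodicMemory:
    """Per-action store of (situation embedding, observed return) pairs."""

    def __init__(self, n_actions, k=3):
        self.k = k
        self.keys = [[] for _ in range(n_actions)]
        self.values = [[] for _ in range(n_actions)]

    def write(self, action, embedding, ret):
        """Remember the return that followed `action` in this situation."""
        self.keys[action].append(np.asarray(embedding, dtype=float))
        self.values[action].append(float(ret))

    def estimate(self, action, embedding):
        """Average return of the k stored situations most similar to `embedding`."""
        if not self.keys[action]:
            return 0.0  # assumption: default value for an action never tried
        keys = np.stack(self.keys[action])
        dists = np.linalg.norm(keys - np.asarray(embedding, dtype=float), axis=1)
        nearest = np.argsort(dists)[: self.k]
        return float(np.mean(np.asarray(self.values[action])[nearest]))

    def act(self, embedding):
        """Choose the action with the highest estimated value."""
        estimates = [self.estimate(a, embedding) for a in range(len(self.keys))]
        return int(np.argmax(estimates))
```

For example, after storing a return of 1.0 for action 0 and 5.0 for action 1 at the situation `[0.0, 0.0]`, a query at the nearby point `[0.1, 0.0]` with `k=1` selects action 1.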

A meta-RL system is composed of a model with memory, a meta-learning algorithm, and a distribution of MDPs.

Potential algorithms we can look into

Episodic Control: MFEC (Model-Free Episodic Control; Blundell et al., 2016)

NEC (Neural Episodic Control; Pritzel et al., 2016)

Episodic LSTM (Ritter et al., 2018)

Automatically generating rewards and training skills

Gupta et al. (2018); Eysenbach et al. (2018)

Idea: sample a skill z, learn a policy conditioned on z, and learn a discriminator q(z|s) that predicts z from the state s. The objective is to maximize the mutual information between states and skills, minimize the mutual information between actions and skills given the state, and maximize the policy entropy.
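In Eysenbach et al. (2018), the discriminator is turned into a pseudo-reward of the form log q(z|s) − log p(z), which is high when the visited state makes the active skill easy to identify. The sketch below computes that quantity from raw discriminator logits. The function name `skill_reward` and the uniform skill prior are assumptions made for the example.

```python
import numpy as np

def skill_reward(disc_logits, z):
    """Pseudo-reward log q(z|s) - log p(z), assuming a uniform prior p(z).

    disc_logits: unnormalised discriminator scores for each skill at state s.
    z: index of the skill the policy is currently conditioned on.
    """
    logits = np.asarray(disc_logits, dtype=float)
    shifted = logits - logits.max()                    # for numerical stability
    log_q = shifted - np.log(np.exp(shifted).sum())    # log-softmax over skills
    log_p = -np.log(len(logits))                       # uniform prior over skills
    return float(log_q[z] - log_p)
```

When the discriminator cannot tell the skills apart (equal logits), the reward is zero. It becomes positive when the discriminator assigns the active skill more probability than the prior does, and negative otherwise.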

Unsupervised RL goal generation

Pretraining RL

- Entropy-based: maximum entropy to encourage exploration

- Skill-based: learning skills

- Knowledge-based: not many resources available

Ideas about our projects

H1 could be tested by constructing such an action space and seeing what behaviors emerge.

- A={task action, play action}

Since we plan to adopt a meta-learning algorithm, there is no need to test H2, H3, or H4.

Turn external reward into intrinsic reward?

- To test the proximate cause: turn fun into an intrinsic reward, e.g. when I push a rock into the ground, I feel an intrinsic reward (fun).

- To test the ultimate cause: the meta-learning framework should allow good performance in dynamic environments.