Authors

Chenyi Li, Sreejan Kumar, Marcelo Mattar

Abstract

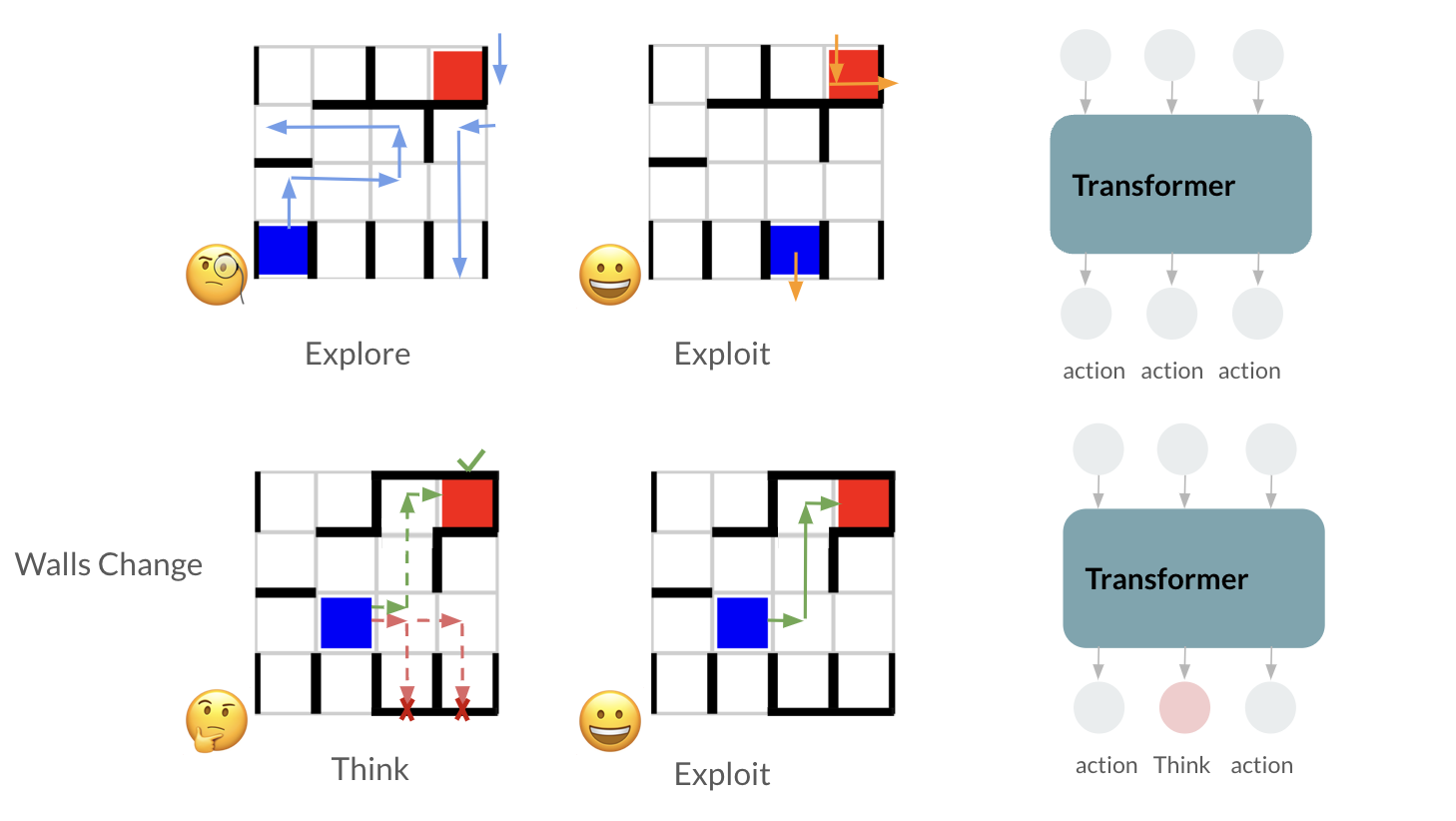

Humans quickly adapt to new situations by learning their underlying rules. This flexible adaptation is driven by meta-learning, the ability to learn how to learn. Previous studies have achieved meta-reinforcement learning using RNN-based agents with planning capabilities, which enable them to determine when and how to perform future rollouts. Recently, many studies have claimed emergent cognitive abilities in transformer-based large language models (LLMs). However, it remains unclear how transformer-based agents achieve meta-reinforcement learning. In this research, we treat meta-reinforcement learning as a sequence modeling problem and develop a transformer agent in which meta-learning emerges naturally through in-context learning. The agent predicts the optimal action from the current state and in-context data. We apply this agent to a 4x4 recurrent spatial navigation task and show that it can achieve one-shot learning. The transformer agent exhibits an explore-exploit pattern in the maze navigation task: during the exploration phase, it visits nearly all positions in the maze; in the exploitation phase, it quickly navigates to the reward position while avoiding walls. After just one trial, the agent’s reward increases and the number of steps to the goal decreases to nearly optimal levels. Our work underscores the potential of transformers for meta-learning by demonstrating one-shot learning in a transformer agent. These findings suggest that transformers could advance cognitive modeling and artificial intelligence, offering new insights into rapid and effective learning processes.
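
The abstract does not give implementation details, so the following is only an illustrative sketch of what an "optimal action" and a "nearly optimal" step count could mean in a 4x4 maze with walls. It assumes a plain, non-wrapping grid, four movement actions, and walls that block movement between adjacent cells; the task's actual boundary conditions, action set, and wall layout are not specified here. All names (`SIZE`, `ACTIONS`, `neighbors`, `distances_to_goal`, `optimal_action`) are hypothetical, and breadth-first search is used as a generic shortest-path reference, not as the authors' method.

```python
from collections import deque

SIZE = 4  # 4x4 grid, matching the task size stated in the abstract
# Assumed action set: (row delta, column delta) for each move.
ACTIONS = {"up": (-1, 0), "down": (1, 0), "left": (0, -1), "right": (0, 1)}


def neighbors(cell, walls):
    """Yield (action, next_cell) pairs reachable from `cell` without crossing a wall.

    `walls` is a set of frozensets, each holding the two adjacent cells
    that a wall separates (an assumed representation).
    """
    r, c = cell
    for name, (dr, dc) in ACTIONS.items():
        nxt = (r + dr, c + dc)
        in_bounds = 0 <= nxt[0] < SIZE and 0 <= nxt[1] < SIZE
        if in_bounds and frozenset((cell, nxt)) not in walls:
            yield name, nxt


def distances_to_goal(goal, walls):
    """Breadth-first search outward from the goal.

    Returns a dict mapping every reachable cell to its shortest step count.
    Searching from the goal is valid because walls block both directions.
    """
    dist = {goal: 0}
    queue = deque([goal])
    while queue:
        cell = queue.popleft()
        for _, nxt in neighbors(cell, walls):
            if nxt not in dist:
                dist[nxt] = dist[cell] + 1
                queue.append(nxt)
    return dist


def optimal_action(state, goal, walls):
    """Return one action that moves a step closer to the goal.

    Returns None if the agent is already at the goal or the goal is unreachable.
    """
    dist = distances_to_goal(goal, walls)
    if state == goal or state not in dist:
        return None
    for name, nxt in neighbors(state, walls):
        if dist.get(nxt) == dist[state] - 1:
            return name
    return None
```

Under these assumptions, `distances_to_goal(goal, walls)[start]` gives the reference step count against which an agent's steps-to-goal could be compared, and `optimal_action` gives one possible prediction target for a given state.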